You may have heard this quote before, or something very much like it. It comes from nuclear physicist Ernest Rutherford (1871-1937), who said, “If you can’t explain your physics to a barmaid it is probably not very good physics.”

With that sentiment in mind, today I’d like to share another quote from the recent hit Sci-Fi novel The Three Body Problem by Cixin Liu. I don’t think I’ve ever seen the difficulties of modern physics summed up so succinctly anywhere else:

Modern theoretical models have become more and more complex, vague, and uncertain. Experimental verification has become more difficult as well. This is a sign that the forefront of physics research seems to be hitting a wall.

In other words, modern physics has become so weird and convoluted that hardly anyone (barmaid or otherwise) can understand it. And that means something is very wrong with modern physics.

Personally, I tend to avoid high-level physics. Part of the reason is that, as a science fiction writer, I’m more concerned with the everyday experiences my characters have to deal with. What’s it really like to walk around on Mars? What sorts of gases might my non-human characters be able to breathe? What could go wrong if some hotshot space pilot tried to fly through the rings of Saturn?

But another part of it is that a lot of high-level theoretical physics stuff (things like string theory, supersymmetry, the multiverse) does sound vague and uncertain. It feels more like guesswork than science. Admittedly, the best guesses of a theoretical physicist are built on a firmer foundation than anything I might think up. But still, for the purposes of science fiction, I feel like I have more leeway to make stuff up with high-level physics than I do with “ordinary” physics like the rocket equation.

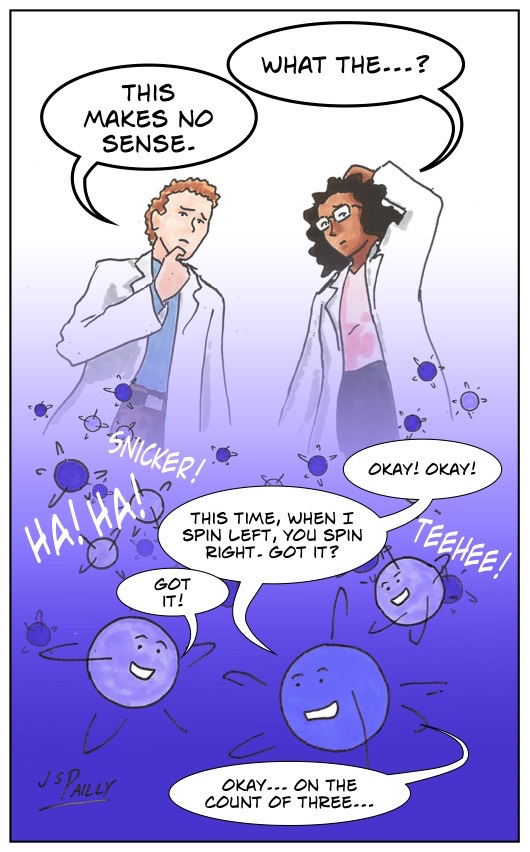

I’ve heard physicists sometimes joke that someone—time travelers, aliens, God, the universe itself—is deliberately messing with us. Maybe that’s why our latest high-tech physics experiments keep producing such confusing results. Maybe that’s why we have to keep resorting to such vague and uncertain theories to explain our discoveries.

And minor spoiler: The Three Body Problem sort of takes that joke and runs with it. But in all seriousness, the forefront of physics does seem to have hit a wall. At least that’s my impression, and I loved how Cixin Liu summed that feeling up in just three quick lines.

It’s easy to get the impression that fundamental physics is at a standstill, but actually a lot of very exciting stuff is happening, for example:

– verification of the existence of the Higgs boson and confirmation of the standard model

– cosmic microwave background measurements

– the accelerating expansion of the universe

– dark matter

– dark energy

– first observation of gravitational waves

– quantum computers

– cosmic inflation

– nanotechnology, superconductivity and new materials

That’s true. I feel like we might be getting close to a really major breakthrough, like when general relativity and quantum theory came out and all the weird discoveries of the late 19th and early 20th centuries suddenly made sense. At least I hope that’s what’s happening.

Did you say multiverse?

I did! A few years back I read an article in Scientific American that said the excess gravity we attribute to dark matter could be the gravity of neighboring universes interacting with our own. I absolutely loved that idea. There’s probably no way to test it, but it’s still a really cool idea.

Look at the title story of the October issue of Scientific American. It describes a physical problem from the quantum mechanics of solids that has been shown to be undecidable. This amounts to a proof that there are non-computable problems in physics. Here we have a case that is complex enough to show non-computability and simple enough that a proof of this is still possible. I suspect that the notorious three body problem is another instance of this, although I don’t know if there is a proof.

The proof that undecidable properties arise in physics (i.e. that physical theories may contain functions that are not Turing-computable) has interesting philosophical consequences. There is a model of scientific explanations known as the “DN” (“deductive-nomological”) model, introduced by philosophers Carl Hempel and Paul Oppenheim. According to it, a scientific explanation of a statement E describing a phenomenon to be explained (the “explanandum”) consists of an enumeration of some laws L1, L2, … Lm and some initial conditions C1, C2, … Cn. E is then logically derived (i.e. computed) from the initial conditions and the laws.

There seems to be a tacit assumption among many scientists that the laws of nature are computable, i.e. that for any set of laws one can always derive E for any set of initial conditions using a finite amount of mathematical knowledge (i.e. a finite algorithm). Most people don’t seem to realize that this is just a hypothesis and take it as an a priori truth, but the undecidability proof implies this hypothesis is actually wrong. Many people seem to tacitly assume that nature somehow computes. But a real physical system just proceeds according to the physical laws describing it; it does not calculate them. Computability is a property of our descriptions, not of the real objects. It is a term belonging to epistemology, not ontology (and no, we do not live inside a computer simulation).

Non-computability means that with any given set of mathematical methods one can only cover a limited (although possibly infinite) set of cases. It does not mean that one cannot calculate any values but that any method for doing so is incomplete. We may always add additional mathematical methods and thus extend the range of solutions, but the resulting theory (consisting of the physical laws and the mathematical methods to apply them) will always remain incomplete.

So the DN-scheme must be extended: We would not only have the laws and the initial conditions, but also a number of calculation methods M1, M2, … Mk. To derive a particular E from the laws and a given set of initial conditions, we would have to use a particular set of such computational methods. In a computable system, there is, for a given set of laws, a finite set of such methods that covers all cases. We can therefore leave these methods implicit. This is done in the classical DN model, where the calculation is just hinted at by drawing a line under the Cs and Ls and it is implicitly assumed that we know how to compute E from them.
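As a rough illustration of the extension described above, the two schemes could be written as follows. The layout (explanans above the line, explanandum below) follows the "line under the Cs and Ls" mentioned in the comment; placing the Ms alongside the laws and conditions is just one possible notation, not something taken from Hempel and Oppenheim.

```latex
% Classical DN scheme: laws and initial conditions above the line,
% the explanandum E below it. The calculation is left implicit.
\[
\frac{L_1, L_2, \dots, L_m \qquad C_1, C_2, \dots, C_n}{E}
\]

% Extended scheme (assumed notation): the calculation methods
% M_1 ... M_k are listed explicitly as part of the explanans.
\[
\frac{L_1, L_2, \dots, L_m \qquad C_1, C_2, \dots, C_n \qquad M_1, M_2, \dots, M_k}{E}
\]
```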

But if the problem is not computable, we may need different calculation methods (Ms) when we change the initial conditions (the Cs, i.e. the case to be calculated). So the physical entity implicitly described by the Laws (the Ls) cannot be described completely in an explicit way by any single formal theory. Formal theories can be constructed for certain sets of Cs and Ls (by providing a set of Ms sufficient for those cases), but in general, the theory of such a physical entity would be computationally incomplete. (So finding a general theory of everything might not be as useful as hoped since it will be computationally incomplete.)

One should note that the classical Popperian notion of falsifiability also becomes problematic here. We can never be sure if all the calculation methods (the Ms) are correct. As a result, we can only falsify the theory consisting of the laws and the calculation methods together, not the laws alone (the laws could be correct while there could be a mistake in our computation methods), and each such theory is special. A falsification of the laws alone is not possible.

The complexity and vagueness that you write about seems to be an inevitable result of some of physics not being computable. Every exact description of a non-computable entity is special (covering only some special cases). So every such description is incomplete. It can be made general by making it vague (vagueness is just another form of incompleteness of a description), by leaving out some detail (i.e. replacing it with an idealized or simplified model) or by introducing (and living with) some error, e.g. by constructing a model that is only an approximation but is not really correct in detail (think of weather simulations, for example). So scientists should just start to get used to expecting the kinds of complexity scholars in the humanities have been facing all the time. Explicitness and generality of theories are mutually exclusive if the entity described is non-computable, i.e. is not describable completely by a single formal theory. Make the description exact and it becomes a special case. Make it general and it becomes vague or approximate. The incompleteness just shifts. In one case, you have a gap in the description; in the other you have vague, i.e. incompletely defined, concepts.

But we should not be too sorry about this. Proving that some physical entities are non-computable (I suspect most complex real-world things belong here) should actually be regarded as the next big breakthrough in physics, because it shows that the situation you describe is inevitable and that reality always has more properties than we will ever be able to derive in any single formal theory. We can always extend the theories, but we will never exhaust reality by our theories. There will always remain some surprise. We should not view this as a defect of reality but as a proof that reality is very rich and interesting and will remain that way.

I’m a little behind on reading Scientific American. I just started reading the issue you’re talking about, and I’m only through the “Advances” section in the front. So I haven’t gotten to the article you’re talking about.

But if I understand you, you’re saying the reason modern physics has hit a metaphorical wall is that we still assume the universe operates like a computer. And if we stop making that assumption, maybe physics will finally be able to make real progress. Is that about right?

I think what is required is accepting that not everything can be completely described with formal theories. Incompleteness means you always only get a part of the whole. You can always extend it, but you never get it all. I don’t know if physics will make “real progress” but understanding what the limits of knowledge are is, I think, progress in itself. It is like in mathematics, where people like Hilbert had hoped everything could be formalized completely and then Gödel and some others came along and proved it was not possible. Gödel’s work was a breakthrough, but the “Hilbert program”, i.e. the attempt to formalize all of mathematics completely, was dead. So when we move into more complex areas, we should expect science to morph into something that resembles the humanities a little bit, where you never get a complete exact theory but only approximations and vague models.

If there is “intrinsic randomness” built into the universe then I would consider that noncomputable by definition. Since the possibility of intrinsic randomness is pertinent in modern physics, I have to lend credence to your speculation.

There are several factors that might restrict our ability to predict the development of a system. Randomness is one of them, non-linearity another, openness of systems a third. However, we have a different kind of situation here. If a system is non-computable, it might be completely deterministic. An experiment will always yield the same result. The equations describing the system will have a well-defined solution for each case. However, you would not have an algorithm to calculate the solution for all cases. You might have an algorithm A1 enabling you to calculate the solutions for one subset of cases, but not for another. You can extend the algorithm into A2, A3 etc. each covering more cases, but it would be impossible to cover all cases. However, the physical system would always “know” how to behave. “Know” actually is the wrong metaphor here because what is going on in physical reality is a physical process but not a calculation.
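To make the idea of a growing family of algorithms A1, A2, A3 a bit more concrete, here is a minimal Python sketch. It is only an analogy and has nothing to do with the actual physics proof: it uses the classic "does this process ever stop?" question, which is known to be undecidable in general. Every name in it (`make_method`, `countdown`, `forever`) is made up for illustration. Each method gives a definite answer for some cases and no answer for the rest, and a bigger step budget covers more cases without ever covering all of them.

```python
from typing import Callable, Iterator, Optional

# A "case" is modelled (hypothetically) as a function that returns an
# iterator of steps. The process "halts" when the iterator is exhausted.
Case = Callable[[], Iterator[None]]


def make_method(budget: int) -> Callable[[Case], Optional[bool]]:
    """Build a method that makes at most `budget` calls to next().

    It returns True if the case is seen to halt within those calls,
    and None ("no answer") otherwise. It never returns False, because
    running out of budget does not prove that the case runs forever.
    """
    def method(case: Case) -> Optional[bool]:
        steps = case()
        for _ in range(budget):
            try:
                next(steps)
            except StopIteration:
                return True  # the process finished: definite answer
        return None  # budget used up: this method cannot tell
    return method


def countdown(n: int) -> Case:
    """A deterministic case that performs n steps and then halts."""
    def case() -> Iterator[None]:
        for _ in range(n):
            yield
    return case


def forever() -> Iterator[None]:
    """A deterministic case that never halts."""
    while True:
        yield


a1 = make_method(10)    # covers only short-running cases
a2 = make_method(100)   # an extension covering more cases

print(a1(countdown(3)))    # True
print(a1(countdown(50)))   # None: outside what a1 can settle
print(a2(countdown(50)))   # True: the extended method settles it
print(a2(forever))         # None: no finite budget settles this one
```

Every case here behaves in a perfectly deterministic way; what is limited is the reach of each individual method, which is the distinction the comment is drawing.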

The authors of the article mentioned have devised a (164 page) proof that a certain property of solid state systems (having a band-gap or not) is undecidable. If this proof is correct, this means that physics as a whole is not computable because there is at least one example of a non-computable phenomenon. So this is not a speculation any more. I guess that there are actually many non-computable phenomena (that is, of course, a speculation). I guess that while many simple phenomena are computable, many complex ones are not, although many might be too complex to prove it.

I finally read that article. It definitely stretches the mind a bit. In a way, I kind of find it reassuring to know that some problems have no solutions. I mean, if we can prove that there is no answer, that’s sort of like finding an answer, if that makes sense.

We can always find the answer but never completely. We have to stretch the mind, so to speak 😉

“So when we move into more complex areas, we should expect science to morph into something that resembles the humanities a little bit, where you never get a complete exact theory but only approximations and vague models.”

That pretty much describes the uncertainty principle. The concept goes back at least to Boltzmann, and the idea that systems that are complex and whose inner workings are invisible to us are only knowable statistically.

Mr. Einstein disagreed (God does not play dice) and general relativity and quantum constructions have yet to be reconciled. I’m inclined to agree with Mr. Einstein. The notion that there “is no such thing” as exact position until we observe it seems unbelievably arrogant. It is not clear if salamanders also have the power to collapse the wave function.

You are right. It is unbelievably arrogant. That is why Schrödinger invented his unfortunate cat, to demonstrate the absurdity of the idea that consciousness causes collapse of the wave function. The idea has long since fallen out of favour with physicists, but is unfortunately now firmly embedded in popular culture.

Unfortunately, the popular culture includes many academic physicists whose interests lean toward less philosophical matters. You can still be taught, “it’s a particle if you ping it and a wave if you don’t” in college physics today.

A physicist I know suggested that I should do a Sciency Words post on “observation,” because the word has a very specific definition in quantum physics. It’s not the same as the common dictionary definition.

The problem for me is that I don’t feel I understand the word well enough yet to write about it. But I do get that the word, as used by quantum physicists, is not meant to imply a conscious or intelligent observer. Pretty much any interaction can be considered an observation, I think.

Consider Tom Campbell, who describes a double-slit blind eraser experiment in which an instrument measures particles going through the slit and is then destroyed, after which scientists check for an interference pattern, as would be expected of a wave. I concur that the conclusions of consciousness collapse theory are absurd, but let’s not dismiss it out of hand for that reason.

I’m not familiar with Tom Campbell. I’ll agree that just because a scientific theory seems absurd, that does not necessarily mean it’s false. The universe is not obligated to conform to human reason.

I think a bit of the problem you speak of is in proof coming mathematically rather than physically. I’ve noticed a lot of “theory” being just mathematically based hypothesis in practice. Theorists can use lawful and theoretical equations for the physical world, both macro and micro, to speculate their way to the vague and convoluted stuff and still maintain legitimacy. I find this annoying myself, as I will spend some time taking a physicist’s word for some idea and then come to find the proof is just a mathematical proof and not anything physically verified.

Yeah, from my perspective as a science fiction writer doing research, that’s something I wish would be made clearer. If all we have is a mathematical proof for an idea, that may still be interesting and useful to know about. But if there’s compelling experimental or observational evidence as well, I’m going to be far more interested in what that means for my story worlds.

I think a distinction needs to be drawn between two cases.

1) When a theory is used to make predictions that are purely mathematical, but where the underlying theory has been experimentally validated. Examples: black holes and gravitational waves (predicted by Einstein’s General Theory of Relativity), antimatter (predicted by Dirac’s relativistic quantum theory), the Higgs boson (predicted by the standard model of particle physics).

2) Purely hypothetical predictions, e.g. supersymmetric particles, magnetic monopoles, string theory, the multiverse.
